3Vs of Big Data: Volume, Velocity, Variety

The concept of Big Data is best understood through its three key characteristics, often referred to as the 3Vs: Volume, Velocity, and Variety. These dimensions help us describe the challenges and features of managing and analyzing Big Data effectively.

1. Volume – The Scale of Data

Volume refers to the massive amount of data generated every second. It’s not about gigabytes or terabytes anymore — we’re now talking in petabytes and even exabytes.

Example: YouTube

YouTube users upload more than 500 hours of video content every minute. That adds up to 720,000 hours of video every day. Processing and storing this vast volume requires scalable, distributed systems like Apache Spark and Hadoop.
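The arithmetic behind that daily figure is easy to check. The sketch below recomputes it from the 500 hours per minute rate. The storage estimate that follows rests on an assumed average of 1 GB per hour of video, which is a made-up round number for illustration and not a YouTube statistic.

```python
# Upload rate quoted in the text.
HOURS_UPLOADED_PER_MINUTE = 500
MINUTES_PER_DAY = 60 * 24

hours_per_day = HOURS_UPLOADED_PER_MINUTE * MINUTES_PER_DAY
print(f"Video uploaded per day: {hours_per_day:,} hours")

# ASSUMPTION: 1 GB per hour of video is an illustrative round number,
# not a published YouTube figure.
ASSUMED_GB_PER_HOUR = 1
terabytes_per_day = hours_per_day * ASSUMED_GB_PER_HOUR / 1000
print(f"Storage under that assumption: {terabytes_per_day:,.0f} TB per day")
```

Even with this deliberately low assumption, the daily total lands in the hundreds of terabytes.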

Question:

Can a single computer store all of YouTube’s daily uploads?

Answer:

No. A single computer doesn’t have enough storage or processing power. That’s why data is distributed across many machines in data centers.
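To build intuition for what "distributed across many machines" can mean, here is a minimal sketch of hash partitioning, one common way to decide which machine holds which record. The cluster size, the video IDs, and the assign_machine helper are all hypothetical; real systems such as Hadoop use their own placement logic.

```python
import zlib
from collections import defaultdict

NUM_MACHINES = 4  # hypothetical cluster size


def assign_machine(video_id: str, num_machines: int = NUM_MACHINES) -> int:
    """Map a video ID to a machine index using a stable hash."""
    # zlib.crc32 gives the same value on every run, unlike the built-in
    # hash() for strings, which is randomized per process.
    return zlib.crc32(video_id.encode("utf-8")) % num_machines


# Hypothetical video IDs, spread across the machines.
video_ids = [f"video-{n}" for n in range(1000)]
placement = defaultdict(list)
for vid in video_ids:
    placement[assign_machine(vid)].append(vid)

for machine in sorted(placement):
    print(f"machine {machine}: {len(placement[machine])} videos")
```

Because the hash is deterministic, any machine can later work out where a given video lives without consulting a central list.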

Python Example – Simulating Large Volume

Let’s simulate the idea of handling large volume by generating a DataFrame with 1 million rows using pandas.

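import pandas as pd
import numpy as np

# Simulate 1 million rows of numeric data
rows = 1000000

df = pd.DataFrame({
    # Random user IDs from 1 to 99,999 (the upper bound is exclusive)
    'user_id': np.random.randint(1, 100000, size=rows),
    # Random watch times from 1 to 59 minutes
    'watch_time_minutes': np.random.randint(1, 60, size=rows)
})

# Show basic info (df.info() prints its report itself and returns None,
# so wrapping it in print() would add a stray "None" line)
df.info()

# Show the first five rows
print(df.head())

Output of df.info() (the rows printed by df.head() are random and vary between runs):

RangeIndex: 1000000 entries, 0 to 999999
Data columns (total 2 columns):
 #   Column              Non-Null Count    Dtype
---  ------              --------------    -----
 0   user_id             1000000 non-null  int64
 1   watch_time_minutes  1000000 non-null  int64
dtypes: int64(2)
memory usage: 15.3 MB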

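This table holds only two integer columns, yet 1 million rows already take about 15 MB of memory. Memory use grows linearly with the row count, so billions of rows would no longer fit comfortably on a single machine. That is Big Data Volume in action.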
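The 15.3 MB reported above can be reproduced by hand: each row holds two int64 values of 8 bytes each. The sketch below uses that to extrapolate to larger row counts. It is a back-of-the-envelope estimate that ignores the small index overhead, and it assumes pandas reports sizes in units of 1024 × 1024 bytes.

```python
BYTES_PER_INT64 = 8
NUM_COLUMNS = 2  # user_id and watch_time_minutes


def estimated_bytes(rows: int) -> int:
    """Raw size of the two int64 columns, ignoring index overhead."""
    return rows * NUM_COLUMNS * BYTES_PER_INT64


for rows in (1_000_000, 1_000_000_000, 100_000_000_000):
    size = estimated_bytes(rows)
    print(f"{rows:>15,} rows -> {size / 2**20:>12,.1f} MiB ({size / 2**30:,.1f} GiB)")
```

The first line of output should agree with the memory usage pandas printed. The later lines show how quickly the same two-column table outgrows the RAM of an ordinary machine.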

2. Velocity – The Speed of Data

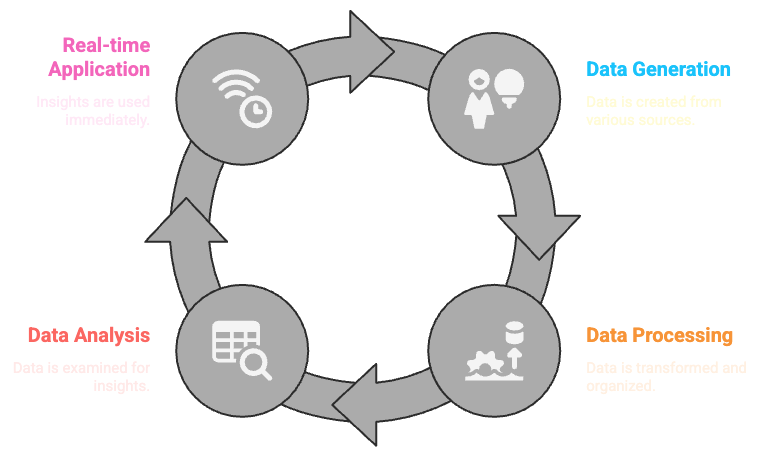

Velocity is the speed at which data is generated, processed, and analyzed. In today's world, real-time or near real-time processing is important for many industries.

Example: Stock Market

Stock prices can change within milliseconds, and thousands of buy/sell orders are placed each second. Traders need real-time analytics to make decisions. Big Data tools help process and analyze this high-velocity data with minimal delay.

Question:

Why is velocity important in fraud detection systems?

Answer:

If a system takes too long to detect fraud, the damage might already be done. High-velocity data analysis helps spot and block suspicious activity in real time.
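As a rough illustration of checking events as they arrive, the sketch below flags a card that makes too many transactions within a short window. The window length, the threshold, the card IDs, and the is_suspicious helper are hypothetical choices for this example. Real fraud systems use far richer signals.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60      # hypothetical look-back window
MAX_TXNS_IN_WINDOW = 3   # hypothetical threshold

recent = defaultdict(deque)  # card_id -> timestamps of recent transactions


def is_suspicious(card_id: str, timestamp: float) -> bool:
    """Record a transaction and report whether the card exceeds the limit."""
    window = recent[card_id]
    window.append(timestamp)
    # Drop timestamps that have fallen out of the look-back window.
    while window and timestamp - window[0] > WINDOW_SECONDS:
        window.popleft()
    return len(window) > MAX_TXNS_IN_WINDOW


# Simulated event stream: (card_id, seconds since start)
events = [("card-A", 0), ("card-B", 5), ("card-A", 10),
          ("card-A", 12), ("card-A", 15), ("card-B", 90)]
flags = [is_suspicious(card, ts) for card, ts in events]
for (card, ts), flagged in zip(events, flags):
    print(f"t={ts:>3}s {card}: {'BLOCK' if flagged else 'ok'}")
```

Each event is judged the moment it arrives, so the fourth rapid transaction on card-A is flagged immediately and does not have to wait for a later batch job.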

Python Example – Simulating Fast Data Stream

Here’s a simple loop that mimics streaming data from a sensor every second.

import time
import random

print("Streaming sensor data...")

for i in range(5):  # simulate 5 data points
    temperature = random.uniform(20.0, 25.0)
    print(f"Reading {i+1}: Temperature = {temperature:.2f} °C")
    time.sleep(1)  # wait one second between readings

Sample output (values are random and vary between runs):

Streaming sensor data...
Reading 1: Temperature = 22.13 °C
Reading 2: Temperature = 20.74 °C
Reading 3: Temperature = 24.21 °C
Reading 4: Temperature = 21.67 °C
Reading 5: Temperature = 23.02 °C

This small demo helps build the intuition for what real-time streaming looks like. Tools like Apache Spark Streaming are designed to handle such data at massive scales and speeds.
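Streaming systems usually do more than print each value: they compute something over the most recent data as it arrives. The plain-Python sketch below shows that idea with a rolling mean over the last three readings. It is not Spark code; the sensor_stream and rolling_mean helpers are hypothetical names, and the one-second delay is left out so the example runs instantly.

```python
import random
from collections import deque
from typing import Iterator


def sensor_stream(n_readings: int, seed: int = 42) -> Iterator[float]:
    """Yield simulated temperature readings one at a time."""
    rng = random.Random(seed)
    for _ in range(n_readings):
        yield rng.uniform(20.0, 25.0)


def rolling_mean(stream: Iterator[float], window: int = 3) -> Iterator[float]:
    """Yield the mean of the last `window` readings as each one arrives."""
    recent = deque(maxlen=window)  # old readings drop off automatically
    for value in stream:
        recent.append(value)
        yield sum(recent) / len(recent)


means = list(rolling_mean(sensor_stream(5)))
for i, mean in enumerate(means, start=1):
    print(f"After reading {i}: rolling mean = {mean:.2f} °C")
```

Only the last few readings are ever held in memory, which is what lets this style of processing keep up with a stream that never ends.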

3. Variety – The Diversity of Data Types

Variety refers to the different forms and formats of data — structured, semi-structured, and unstructured.

- Structured: Data in rows and columns (e.g., Excel, SQL tables)

- Semi-structured: Data with tags but no fixed schema (e.g., JSON, XML)

- Unstructured: Data like images, videos, PDFs, audio recordings

Example: A Medical Record System

- Patient details stored in tables (structured)

- Doctor’s notes stored in JSON format (semi-structured)

- X-rays, MRI scans, and audio dictations (unstructured)

Big Data platforms can integrate and analyze all these formats together to give meaningful insights, like predicting disease risks based on a combination of structured and unstructured data.
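The medical record example can be sketched in a few lines of standard-library Python. All of the data here is invented for illustration: a one-row CSV stands in for the structured table, a small JSON string for the doctor's note, and placeholder bytes for an X-ray image.

```python
import csv
import io
import json

# Structured: fixed columns, parsed with the csv module.
patients_csv = "patient_id,name,age\n101,Alice,54\n"
patient = next(csv.DictReader(io.StringIO(patients_csv)))

# Semi-structured: JSON with nested and optional fields.
note_json = '{"patient_id": 101, "note": "persistent cough", "tags": ["follow-up"]}'
note = json.loads(note_json)

# Unstructured: raw bytes with no schema (placeholder, not a real image).
xray_bytes = b"\x89PNG placeholder, not a real image"

# Combine the three sources into one record keyed by patient ID.
combined = {
    "patient_id": int(patient["patient_id"]),
    "age": int(patient["age"]),
    "note": note["note"],
    "tags": note.get("tags", []),
    "xray_size_bytes": len(xray_bytes),
}
print(combined)
```

Each format needs its own parser, and the unstructured bytes reveal nothing beyond their size until a specialized tool (for example, an image model) interprets them.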

Question:

Can traditional databases store videos and images efficiently?

Answer:

Generally not. Traditional relational databases can hold binary objects (BLOBs), but they are optimized for structured tabular data. Large unstructured files such as videos and images need different storage and retrieval mechanisms, which Big Data systems support.
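One common pattern, sketched here under simplifying assumptions, is to keep the large file in separate storage and store only its location and metadata in a table. In this example a temporary directory stands in for file or object storage, SQLite stands in for the database, and the table layout and file name are hypothetical.

```python
import sqlite3
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    # The unstructured payload goes to the file system
    # (standing in for object storage).
    scan_path = Path(tmp) / "scan_101.bin"
    scan_path.write_bytes(b"\x00" * 2048)  # placeholder bytes, not a real scan

    # Structured metadata, including the file location, goes into a table.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE scans (patient_id INTEGER, path TEXT, size_bytes INTEGER)"
    )
    conn.execute(
        "INSERT INTO scans VALUES (?, ?, ?)",
        (101, str(scan_path), scan_path.stat().st_size),
    )

    # A query finds the file; the bytes are then read from storage.
    path, size = conn.execute(
        "SELECT path, size_bytes FROM scans WHERE patient_id = ?", (101,)
    ).fetchone()
    loaded = Path(path).read_bytes()
    conn.close()

print(f"Loaded {len(loaded)} bytes (metadata says {size})")
```

The table stays small and fast to query, while the bulky bytes live wherever large files are cheapest to keep.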

Summary

The 3Vs — Volume, Velocity, and Variety — are foundational to understanding what makes Big Data unique. These challenges push us to use advanced tools like Apache Spark, which are built to handle scale, speed, and complexity. A strong grasp of these concepts will prepare you to work with real-world data efficiently and meaningfully.
